7 Essential Elements to A/B Test in Your Next Email Campaign

If you’re into email marketing, chances are you’ve come across the idea of email marketing A/B testing—or maybe you’ve even tried out a few tests on smaller parts of your emails.

But if you’re really aiming to boost your email marketing results, A/B testing goes way beyond small tweaks; it’s a powerful way to gather solid data on what actually clicks with your audience.

In today’s competitive marketing space, A/B testing is key for creating email campaigns that drive engagement and conversions, helping you truly understand what resonates with your readers.

A/B testing, sometimes known as split testing, is a technique that lets you experiment with different versions of your email elements to see which one performs best.

Essentially, by creating two or more versions of your email (A and B), each with a specific variation, you can test factors such as subject lines, CTAs, or even send times to see what encourages your audience to engage.
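For a fair comparison, recipients should be assigned to each version at random rather than by sign-up date or alphabetical order. The snippet below is a minimal sketch of one way to do that, assuming your list is a plain Python list of addresses; the `split_recipients` function and the example addresses are hypothetical and not part of any particular email platform.

```python
import random


def split_recipients(recipients, n_variants=2, seed=42):
    """Shuffle recipients and deal them into n_variants groups of near-equal size."""
    shuffled = list(recipients)  # copy so the original list is left untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    # Deal round-robin so group sizes differ by at most one.
    return [shuffled[i::n_variants] for i in range(n_variants)]


# Hypothetical example list
recipients = [f"user{i}@example.com" for i in range(10)]
group_a, group_b = split_recipients(recipients)
print(len(group_a), len(group_b))
```

Most email platforms handle this split for you; the point of the sketch is simply that each recipient lands in exactly one group and that chance, not list order, decides which.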

Email marketing A/B testing can provide actionable insights into audience preferences, helping you make data-driven decisions that increase open rates, click-throughs, and overall conversion rates.

At RD Marketing, we understand the importance of reaching your audience effectively. With services like our B2B data solutions and CTPS checker, we’re here to help marketers fine-tune their campaigns.

Using data-driven strategies, such as A/B testing, in combination with the right mailing lists or data cleansing practices, can elevate any email campaign to new heights of success.

The 7 Essential Elements to A/B Test in Your Email Campaigns

A/B testing is most effective when applied to the right elements. Here, we’ll go through the seven critical components you should consider testing in your email campaigns, from subject lines to personalisation.

By experimenting with each of these elements, you can get clear insights into what drives the highest engagement and optimise your email marketing strategy.

Subject Line

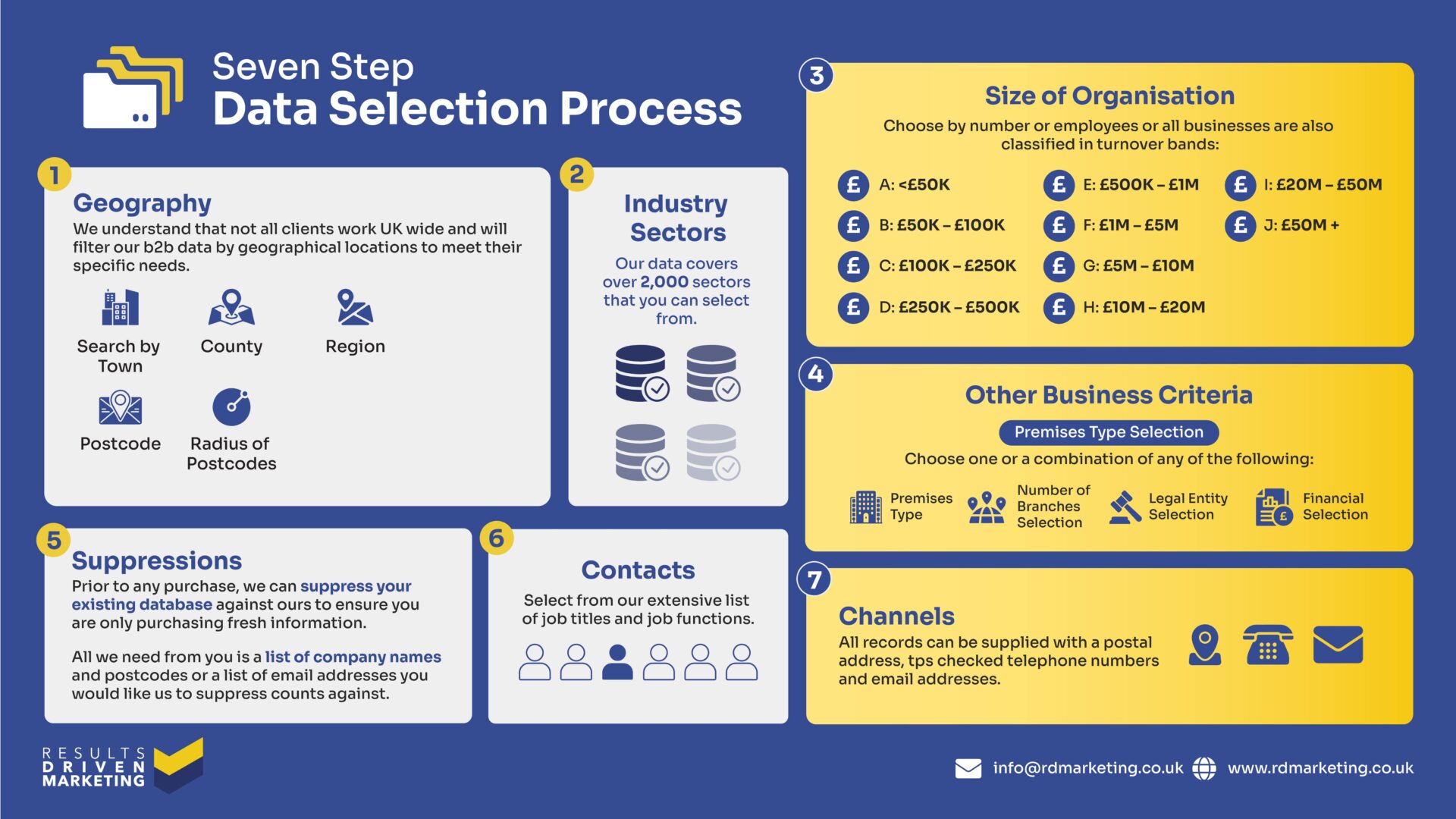

Subject lines are often the first (and sometimes only) chance to capture your reader’s attention, making them one of the most impactful elements to A/B test in email marketing campaigns.

Since subject lines directly impact open rates, even small changes can make a noticeable difference in performance.

Tips for A/B Testing Subject Lines:

- Question-Based vs. Statement-Based: Try testing question-based subject lines (e.g., “Want to Increase Your Open Rates?”) against statement-based ones (“Increase Your Open Rates with This Simple Trick”) to see what garners more curiosity.

- Length Variations: Test short, punchy subject lines against longer, more descriptive ones. Depending on your audience, a shorter subject may grab attention more effectively, or a descriptive line might give better context.

- Personalised vs. Generic: Personalised subject lines can make emails feel more targeted, but they may not always resonate with every audience. Test subject lines with the recipient’s name or company name against general options to see which approach performs better.

For email marketing campaigns that rely on high-quality B2B data, personalised subject lines often make a stronger impact, particularly if they’re tailored by industry or role.

Email Copy

The tone, length, and structure of your email copy are crucial elements that can heavily influence engagement. A/B testing various copy formats helps determine which style resonates best, especially if you’re sending emails to diverse audiences.

How to A/B Test Email Copy:

- Tone of Voice: Experiment with formal versus casual language to see what resonates more. Some audiences might appreciate a friendly, conversational tone, while others may prefer a straightforward, professional approach.

- Bullet Points vs. Paragraphs: For more complex messages, try testing a bulleted format against traditional paragraphs. Bullet points make the email content easier to skim, which can appeal to busy recipients.

- Call-to-Action Variations: Within the email body, test multiple CTAs (e.g., “Learn More” vs. “Get Started”) to identify which phrasing encourages clicks. CTA placement and repetition within the body are also worth testing to maximise response rates.

For example, our Email Address List Data service could be promoted through direct, clear CTAs or a more conversational invitation, depending on audience preferences.

Images and Visuals

Visuals can make emails more engaging but can also distract if not used effectively. Testing visuals allows you to find the right balance between text and imagery, ensuring that your email is visually appealing without overwhelming the reader.

Best Practices for A/B Testing Visuals:

- Image Placement: Try testing emails with images at the top versus within the body to see which placement garners more engagement.

- Type of Visuals: Test photos versus illustrations or icons. Depending on the message, illustrations may communicate ideas more effectively, while photographs can create a more personal feel.

- With or Without Images: Some audiences may respond better to simple, text-based emails, while others engage more with image-rich designs. Testing emails with and without visuals can help determine which format works best for specific campaigns.

If you’re running campaigns targeting industries with international reach, testing visuals might yield different preferences based on regional design trends.

Call-to-Action (CTA)

The CTA is arguably the most critical part of your email—it’s what drives readers to take action. Optimising your CTA through A/B testing can make a noticeable difference in click-through rates.

A/B Testing Ideas for CTAs:

- Text Variations: Try phrases like “Learn More,” “Get Started,” “Shop Now,” or even “Try for Free.” Different audiences may respond better to certain wordings, depending on the context of your email.

- Colour Choices: Button colour affects how much a CTA stands out, so test various button colours to see which ones drive more clicks. For instance, orange or red buttons might catch attention, while green can symbolise “go” or “start.”

- Placement: Try positioning your CTA button in different areas, such as after a paragraph or at the end of the email. Repeating the CTA in several places can also help encourage clicks without overwhelming the reader.

Our Telemarketing Data service, for example, could benefit from a straightforward “Request Data” CTA that’s clear, prominent, and enticing.

Send Time and Day

Timing is crucial in email marketing. Your audience’s engagement can vary significantly depending on when they receive your email, making the send time and day a valuable element to A/B test.

Recommendations for Send Time A/B Testing:

- Weekday vs. Weekend: If your audience is primarily professionals, weekday emails may perform better, but testing a Saturday or Sunday send can reveal if there’s potential for weekend engagement.

- Morning vs. Afternoon: Some recipients engage more during the morning, while others prefer afternoon or evening emails. Test sending emails at different times to find out when your audience is most responsive.

With targeted campaigns, such as those promoting our Consumer Data, send times could make a substantial difference in performance.

Personalisation Elements

Personalisation can make a big difference in email marketing, but not all personalisation tactics work for every audience. A/B testing different levels of personalisation lets you find the optimal balance for your campaigns.

Personalisation Strategies to Test:

- Name Inclusion: Test subject lines and greetings that include the recipient’s name to see if this minor personalisation increases engagement.

- Segmentation-Based Personalisation: Personalise emails based on industry, job title, or location. For instance, you might refer to specific needs or industry pain points, showing recipients you understand their unique challenges.

Using segmentation with services like Data Cleansing Services to refine your list can further enhance personalisation efforts, leading to a more targeted campaign.

Email Design and Layout

The design and layout of your emails impact how easy it is for recipients to consume content. Testing different templates can help you determine which design formats lead to higher engagement.

Ideas for A/B Testing Layouts:

- One-Column vs. Multi-Column: Single-column designs are simple and easy to read, while multi-column layouts can present more information in a structured way. Test both to see which style your audience prefers.

- Balance of Text to Images: Test different proportions of text and images to find the ideal balance. Some audiences may prefer more visuals, while others engage more with text-heavy designs.

- Mobile-Friendly Adjustments: With the growing mobile user base, it’s essential to ensure emails are optimised for mobile viewing. Test layouts that emphasise mobile readability for a seamless user experience.

For complex offerings, like our Email Marketing Management Services, a clean, single-column layout might help ensure clarity, while multi-column designs might work better for B2B clients seeking detailed information.

Best Practices for A/B Testing in Email Marketing

Implementing effective A/B testing in your email marketing requires careful planning and a clear approach.

Here, we’ll walk through some key best practices to help you get the most accurate, actionable insights from each test, ensuring that every experiment contributes meaningfully to your email marketing strategy.

Set Clear Goals and Define Success Metrics

Before jumping into an A/B test, it’s crucial to define what you want to achieve. Are you looking to increase open rates, boost click-through rates, or drive more conversions?

Having a specific goal for each test not only keeps the experiment focused but also makes it easier to measure the impact of the changes. Common goals in email marketing A/B testing include:

- Increasing Open Rates: Often tested with subject line variations.

- Boosting Click-Through Rates (CTR): Testing CTA placement, wording, and visual appeal.

- Enhancing Conversion Rates: Experimenting with email copy, CTAs, and personalised content.

Once you’ve established a goal, decide on the success metrics you’ll use to measure it. For example, if the goal is to increase click-throughs, your main metric should be CTR. Defining these metrics helps you evaluate each version accurately and draw clear conclusions.
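As a rough illustration, the three metrics mentioned above can be calculated from raw campaign counts. This is a sketch under one common set of definitions, with every rate measured against delivered emails; some platforms instead measure clicks against opens, so check how yours defines each figure. The `summarise` function and the numbers are hypothetical.

```python
def rate(numerator, denominator):
    """Return numerator / denominator, or 0.0 when nothing was delivered."""
    return numerator / denominator if denominator else 0.0


def summarise(delivered, opens, clicks, conversions):
    """Compute the headline metrics for one test version.

    Assumption: every rate uses delivered emails as the denominator.
    """
    return {
        "open_rate": rate(opens, delivered),
        "ctr": rate(clicks, delivered),
        "conversion_rate": rate(conversions, delivered),
    }


# Hypothetical counts for a single version of an email
metrics = summarise(delivered=1000, opens=250, clicks=50, conversions=10)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```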

If you’re promoting high-value services, like B2B data or data enrichment services, a conversion-focused approach may be more appropriate.

Test One Element at a Time for Accurate Results

To obtain clear, reliable data, focus on testing just one element per A/B test. By isolating variables—like testing only the subject line or only the CTA—you can be confident that any changes in performance are due to the specific variation you’re testing.

For instance, if you’re testing an email for our Direct Mail Data services, and you want to know whether changing the CTA wording improves engagement, avoid altering the subject line in the same test.

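Changing several elements within a single variant muddies the results, because you can’t tell which change made the difference. Multivariate testing, which tests combinations of elements in a structured way, is a separate technique and typically needs a much larger audience to produce reliable results.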

Some elements to test individually include:

- Subject Line Variations: Short vs. long, personalised vs. generic.

- CTA Button Text and Colour: Simple variations like “Learn More” vs. “Start Now.”

- Email Design and Layout: One-column vs. multi-column.

By isolating these elements, you can gain more actionable insights and make informed decisions about your next email marketing steps.

Ensure Statistical Significance and Use Adequate Sample Sizes

To draw valid conclusions from your A/B test results, it’s essential to aim for statistical significance. In simple terms, this means gathering enough data to be confident that your results aren’t just due to chance. Here’s how to approach this:

- Choose an Adequate Sample Size: The number of recipients you need in each test group depends mainly on your current rate and the size of the difference you want to detect, not on list size alone. On a large list, a small percentage of recipients can be enough for statistically significant results, whereas on a smaller list you may need to test a larger portion, or even the whole list.

- Track Results Over Time: To reach statistical significance, you may need to run tests for a set period or until you reach a sample size decided in advance. Stopping as soon as one version pulls ahead can produce a false winner. For instance, if you’re testing an email campaign for international email lists, it’s important to gather results across different regions and times to ensure accuracy.

If statistical analysis seems complex, many email marketing platforms offer tools to help calculate sample size and significance automatically, or you can use online calculators. Investing time to ensure significance helps you make decisions confidently, knowing your data is robust.
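If you’d like a feel for the numbers before reaching for a calculator, the sketch below estimates how many recipients each group needs in order to detect a given lift. It uses a standard normal-approximation formula for comparing two proportions; the 5% significance level, 80% power, and the example rates are assumptions chosen for illustration, and `sample_size_per_group` is a hypothetical helper rather than a feature of any specific tool.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_baseline, p_expected, alpha=0.05, power=0.8):
    """Approximate recipients needed per group for a two-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    p_bar = (p_baseline + p_expected) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_baseline * (1 - p_baseline) + p_expected * (1 - p_expected))
    ) ** 2
    return ceil(numerator / (p_expected - p_baseline) ** 2)


# Hypothetical scenario: open rate is 20% today and you hope to reach 25%
print(sample_size_per_group(0.20, 0.25))
# A smaller expected lift needs far more recipients per group
print(sample_size_per_group(0.20, 0.22))
```

The practical takeaway is that small improvements are much harder to detect than large ones, so decide in advance what size of lift is worth acting on.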

Document and Analyse Results for Continuous Improvement

After each test, document your findings and analyse what worked and what didn’t. Recording data like open rates, CTRs, and conversion rates from each test provides valuable benchmarks for future campaigns. Additionally, maintaining a record allows you to build on past experiments and continuously refine your email marketing strategy.

When reviewing your results, consider the broader patterns across multiple tests. For instance, if certain CTAs consistently drive higher click-throughs or if emails with specific design layouts improve engagement, these insights can shape your ongoing approach.

Documenting results from various tests—such as those for Telemarketing Data or consumer data campaigns—can also support decision-making across different product lines.

Implement a Systematic Testing Schedule

To maximise the benefits of email marketing A/B testing, establish a regular testing schedule. Consistent testing keeps your marketing strategy adaptive and responsive to audience preferences.

Whether it’s testing weekly emails or quarterly campaigns, a structured approach helps you maintain a steady flow of insights, leading to incremental improvements over time.

By following these best practices, your A/B testing can reveal valuable insights that not only improve individual campaigns but also guide the development of your entire email marketing strategy.

Remember, A/B testing is a journey—it’s about continually refining your approach to create campaigns that resonate more deeply and drive real results.

How to Analyse and Apply A/B Testing Results

After running an A/B test, the next step is to dive into the data and uncover what it can teach you about your audience’s preferences and behaviour.

Careful analysis not only helps you understand which version performed better but also equips you with insights to fine-tune future email campaigns. Let’s look at some practical steps to effectively gather, interpret, and apply A/B testing results in your email marketing strategy.

Gather Your A/B Test Data

Once your test concludes, start by collecting all relevant data points, such as open rates, click-through rates (CTR), conversion rates, and any other metrics that align with your goals. Depending on the element you tested—like subject lines or call-to-action buttons—different metrics will be more relevant.

For example:

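- Open Rates are the usual metric for testing subject lines and send times, though privacy features such as Apple Mail Privacy Protection can inflate recorded opens, so treat them as a guide rather than an exact count.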

- CTR helps evaluate CTA button text or placement within the email.

- Conversions offer insights for tests focused on email content, layout, and personalisation.

To streamline data gathering, consider using an email marketing management platform that provides built-in analytics and reporting features, like our Email Marketing Management Services. Such tools can automatically display the performance of each test version, helping you save time and focus on analysis.

Compare Results to Identify Patterns

Once you have your data, it’s time to start analysing. Begin by comparing the performance of each test version against your predefined success metrics. For instance, if your goal was to increase CTR by testing CTA colours, look for a significant difference between the click-through rates of each version.

If the green CTA outperformed the red by a statistically significant margin, that’s a good indicator of your audience’s preference. A small gap on a small sample may simply be noise.
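One common way to check whether a gap between two versions is likely to be real is a two-proportion z-test. The sketch below is a simplified illustration using only Python’s standard library; the click counts are made up, `two_proportion_z_test` is a hypothetical helper, and your email platform may use a different statistical method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # both versions had identical all-or-nothing results
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: red CTA (A) vs green CTA (B), 2,000 recipients each
z, p_value = two_proportion_z_test(success_a=120, n_a=2000, success_b=156, n_b=2000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
if p_value < 0.05:
    print("The difference is unlikely to be due to chance alone.")
else:
    print("The difference could plausibly be noise; keep testing.")
```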

Some practical tips to make the most of your data analysis:

- Look for Consistent Patterns: If certain elements perform better across multiple tests (e.g., shorter subject lines consistently leading to higher open rates), these patterns can guide future campaigns.

- Identify Audience Segments: For campaigns aimed at specific audience types, such as those using our Telemarketing Data or International Email List, examine how different segments responded. Some groups may prefer more personalised subject lines, while others are more responsive to particular CTA styles.

By analysing these trends, you can begin to understand what your audience truly values and tailor your content accordingly.

Use Insights to Refine and Segment Future Campaigns

The real value of A/B testing lies in applying your findings to optimise future campaigns. Use the insights you’ve gathered to create more segmented, targeted emails that cater specifically to the preferences revealed in your testing. For example:

- Segmented Campaigns Based on Preferences: If your audience responds differently to formal versus informal language, consider segmenting your list. You could send formal emails to more traditional businesses using our B2B Data while reserving a conversational tone for companies in creative industries.

- Refined Personalisation Techniques: Personalisation is a powerful tool, but knowing exactly what type of personalisation works best for your audience is crucial. If name-based personalisation in subject lines boosts open rates, apply this across future emails, especially in campaigns targeting consumer data lists.

- Adapted Content and Timing: Suppose your testing shows that emails sent on Tuesdays achieve higher engagement than those sent on Fridays. Schedule future campaigns accordingly, tailoring send times based on audience preferences.

Alongside testing, screening your lists, for example with our CTPS checker, helps you keep your targeting compliant and avoid restricted contacts.

Document Results for Continuous Learning

A/B testing is an ongoing process that yields increasingly accurate insights over time. To make the most of it, document each test and note the results.

Keeping a record allows you to build a repository of insights that can be referenced and expanded as your email marketing strategy evolves.

Key points to document include:

- The test element (e.g., subject line, CTA placement, etc.).

- The different variations tested.

- Results for each variation (CTR, open rate, etc.).

- Audience segment data, if applicable.
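A spreadsheet works perfectly well for this, but if you prefer to keep your log in code, the points above map neatly onto a simple record. The following is a minimal sketch, assuming you want one row per variation exported as CSV; the `AbTestRecord` field names and the figures are hypothetical.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class AbTestRecord:
    element: str    # what was tested, e.g. "subject line"
    variant: str    # which variation this row describes
    segment: str    # audience segment, or "all" if not segmented
    delivered: int
    opens: int
    clicks: int


def to_csv(records):
    """Serialise records to CSV text with one header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(AbTestRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


# Hypothetical log entries for one subject line test
log = [
    AbTestRecord("subject line", "A: question", "all", 2000, 410, 96),
    AbTestRecord("subject line", "B: statement", "all", 2000, 455, 101),
]
print(to_csv(log))
```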

With each new test, you’ll gather more data to inform future campaigns. For example, insights from a test on Direct Mail Data promotions could be invaluable when launching similar campaigns for other data services, like data enrichment or data cleansing.

Apply Results to a Broader Email Marketing Strategy

Once you’ve gathered, analysed, and documented your A/B test results, apply these insights strategically across your email marketing campaigns.

A/B testing can refine not only individual emails but also overarching strategies, ensuring each aspect of your email marketing is optimised for maximum engagement.

Implementing these best practices will help you unlock the full potential of email marketing A/B testing, allowing you to continuously refine your approach, drive higher engagement, and achieve more impactful results.

For more insights and tools to elevate your email marketing strategy, explore our full range of data services at RD Marketing, where our B2B data, email list data, and advanced segmentation tools can support and enhance every stage of your campaigns.

Conclusion

A/B testing has become an invaluable asset in email marketing, allowing marketers to pinpoint exactly what works best to engage and convert their audience. By testing and refining essential elements—from subject lines and CTAs to personalisation and design—marketers can continuously improve their campaigns, ensuring every email sent is better optimised to meet its goals.

With strategic email marketing A/B testing, each campaign becomes a valuable learning experience, providing insights that not only enhance current campaigns but also build a foundation for future success.

As you look to refine your own email marketing efforts, start by testing just one or two elements discussed here, like subject lines or CTAs.

Watch how even small changes in wording, layout, or send time can shift the performance needle. Over time, with consistent testing, you’ll develop a set of data-backed strategies that cater to your unique audience preferences, maximising both engagement and ROI.

At RD Marketing, we’re here to support your A/B testing journey with services tailored for optimised email marketing, from B2B data to advanced data enrichment services.

Whether you’re looking to segment audiences with consumer data, verify contacts with our CTPS checker, or run campaigns on targeted international email lists, our data solutions are designed to help you connect more effectively with your audience.

So, as you set out to A/B test and refine your next email campaign, explore our comprehensive range of email marketing tools and services that can help make your testing process even smoother.

Visit RD Marketing to see how we can assist in elevating your campaigns—whether it’s through precise email address list data, data cleansing, or our email marketing management services. Let’s take your email marketing A/B testing and overall strategy to the next level together.

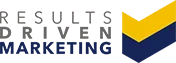

Who are we?

Thinking about “how do I buy data”?

Providing B2B database solutions is our passion.

Offering a consultancy service prior to purchase, our advisors always aim to supply a database that meets your specific marketing needs, exactly.

We also supply email marketing solutions with our email marketing platform and email automation software.

Results Driven Marketing have the best email list data for your networking solutions, as well as direct mailing lists and telemarketing data.

We provide data cleansing and data enrichment services to make sure you get the best data quality.

We provide email marketing lists and an international email list for your business needs.

At RDM, we provide B2C data through our connections with the best B2C data brokers.

A good quality B2B database is the heartbeat of any direct marketing campaign…

It makes sense to ensure you have access to the best!

Call us today on 0191 406 6399 to discuss your specific needs.
